Performance tuning in asynchronous languages can be complicated.

Back in the day when most languages didn’t support asynchronous functions, and you could follow the control flow simply by reading the code top to bottom, performance tuning was simple. To speed up a process, you had only a few options: make fewer function calls, make the function calls faster, or get better hardware.

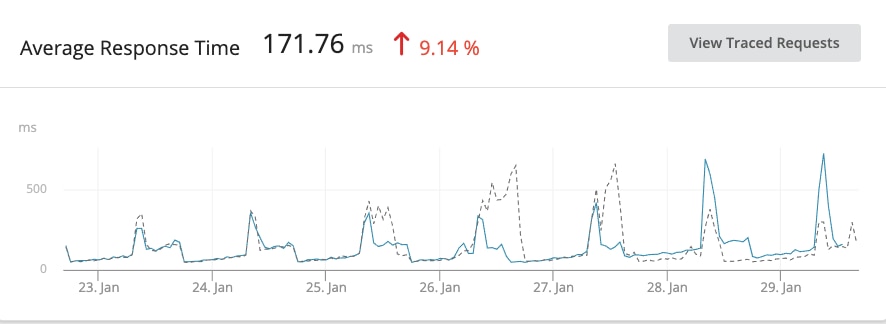

Now that we’re living in a world where we might process high volumes of requests through asynchronous runtimes, it can be a bit more complicated. If you have an asynchronous web application and it’s slow, you first need to understand what part of it is slow, and that can be a difficult process when you can’t simply read the code from top to bottom (enter SolarWinds® AppOptics™).

Callback Architecture is Important

To understand what is slow and how we might be able to improve the response time of our web app, we need to look at the execution flow. Enter the callback. For the purpose of this blog, let’s take a look at an example endpoint that contains the following logic.

Example transaction logic:

- User-facing endpoint, for searching hotels

- Endpoint makes three service calls to pull hotel data

- Endpoint makes one service call that posts analytics data to a CRM for sales

- Returns list of hotels matching the user’s search criteria

Let’s take a look at what that code looks like, written out from a synchronous perspective.

require('appoptics-apm');

const express = require('express');

const app = express();

const request = require('request');

app.get('/', function (req,res) {

const service_call_1 = request('http://localhost:8082/api-v1/hotels', { json: true });

const service_call_2 = request('http://localhost:8082/api-v1/hotels', { json: true });

const service_call_3 = request('http://localhost:8082/api-v1/hotels', { json: true });

const analytics_payload = {'tags': {'environment': 'test'}, 'measurements': [{'name': 'analytics', 'value': 5}]}; // placeholder value sent to analytics

request.post({uri: 'https://api.examplecrm.com/v1/measurements', json: true, auth:{'user': 'test_user', 'pass': ''}, 'body': analytics_payload})

res.send('Hello World');

})

app.listen(3000, () => console.log('Example app listening on port 3000!'))

This code looks good, and it checks all the boxes of our logic above. So what does the execution look like?

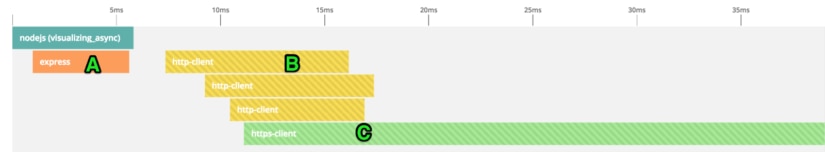

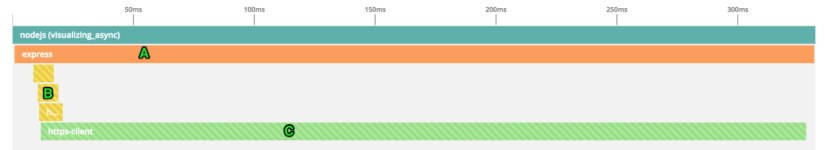

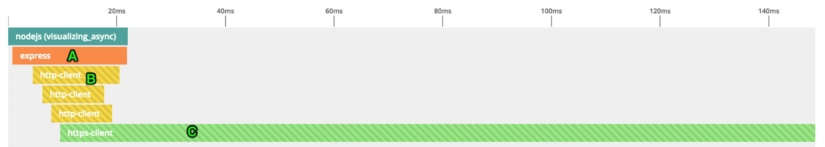

Well, it technically did all the things we wanted it to, but not exactly in the right order. The service calls happened at seemingly random times (B), and the response was actually returned to the user (A) before we had the data ready from the service calls. If this code were completely synchronous, it would have been fine, but since it is asynchronous, we have to be more mindful of the control flow.
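To make that ordering concrete, here is a minimal sketch that reproduces it without Express or a network. The names `fakeServiceCall`, `handler`, and `events` are hypothetical stand-ins for illustration, and a timer plays the role of the HTTP call.

```javascript
// Hypothetical stand-in for request(): finishes after delayMs, then calls back.
function fakeServiceCall(name, delayMs, callback) {
  setTimeout(() => callback(null, name + ' data'), delayMs);
}

const events = [];

function handler(send) {
  // The call starts here, but its callback only runs once the timer fires.
  fakeServiceCall('hotels', 10, (err, body) => {
    events.push('service call finished');
  });
  // This line runs right away, before the callback above.
  send('Hello World');
}

handler((body) => events.push('response sent'));

console.log(events); // [ 'response sent' ]
setTimeout(() => console.log(events), 50); // [ 'response sent', 'service call finished' ]
```

The response goes out first because nothing in `handler` waits for the service call to finish.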

Visualizing Callbacks

The point of this post is to talk about callbacks in Node.js, so let’s get into it. In the code example above, we let the async nature of Node run wild, and it didn’t end well. Callbacks are a basic part of async architecture, and they enable us to control the order of execution.

In our example above, we’re using the request module to make service calls. Like many modules/functions, request is designed to take a callback as the last argument. What does this do? At a basic level, it means that request will do its normal work (make an HTTP call) and, once that work is complete, execute the callback. The callback can be any function, and you can define that function inline.
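Here is a small sketch of that convention. `fetchHotels` is a hypothetical stand-in for `request(url, options, callback)`: it uses a timer instead of a real HTTP call, and the hotel data it returns is made up for illustration. The callback receives an error first, then the response and body, which matches the `(error, response, body)` shape used in this post.

```javascript
// Hypothetical stand-in for request(url, options, callback).
function fetchHotels(url, options, callback) {
  // Simulate network latency with a timer instead of a real HTTP call.
  setTimeout(() => {
    if (!url) {
      callback(new Error('url is required'));
      return;
    }
    // Made-up response and body, for illustration only.
    callback(null, { statusCode: 200 }, [{ name: 'Example Hotel' }]);
  }, 10);
}

// The callback is the last argument and is defined inline.
fetchHotels('http://localhost:8082/api-v1/hotels', { json: true }, function (error, response, body) {
  if (error) {
    console.error('service call failed:', error.message);
    return;
  }
  console.log(response.statusCode, body.length); // 200 1
});
```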

Now that we know how callbacks work, let’s put it into action with our example code from before. This time let’s keep in mind that we want to finish all the service calls and analytics tracking before returning to the user!

//https://github.com/adam-hert/visualizing_async/blob/callback_hell/server.js

require('appoptics-apm')

const express = require('express')

const app = express()

const request = require('request');

app.get('/', function (req,res){

const service_call_1 = request('http://localhost:8082/api-v1/hotels', { json: true }, function (e,r,body){

const service_call_2 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(e,r,body){

const service_call_3 = request('http://localhost:8082/api-v1/hotels', { json: true }, function (e,r,body){

const analytics_payload = {'tags': {'environment': 'test'}, 'measurements': [{'name': 'analytics', 'value': 5}]}; // placeholder value sent to analytics

request.post({uri: 'https://api.examplecrm.com/v1/measurements',json: true, auth:{'user':'test_user', 'pass':''}, 'body':analytics_payload}, function (e,r,body){

res.send('Hello World');

});

});

});

});

});

app.listen(3000, () => console.log('Example app listening on port 3000!'))

Great! We have checked all the boxes and returned the correct content to the user. Ship it! Looking at the execution of the code, though, it’s no better than synchronous code because it is executed sequentially. What was the point of moving to Node again? We could have done this in PHP…

The way we designed the callbacks, they might as well be synchronous because each waits on the previous one to complete, and we experience none of the benefits of JavaScript’s non-blocking nature. It’s up to us to design the callbacks to block only on what we need to. Let’s take another pass at this. The three service calls we make to fetch data aren’t dependent on each other, so let’s detach them a bit.

require('appoptics-apm');

const express = require('express');

const app = express();

const request = require('request');

var tasks_left = 0;

app.get('/', function (req,res){

tasks_left = 4;

const service_call_1 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res)

});

const service_call_2 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res)

});

const service_call_3 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res)

});

const analytics_payload = {'tags': {'environment': 'test'}, 'measurements': [{'name': 'analytics', 'value': 5}]}; // placeholder value sent to analytics

request.post({uri: 'https://api.examplecrm.com/v1/measurements',json: true, auth:{'user':'test_user', 'pass':''}, 'body':analytics_payload}, function(error,response,body){

return_res(req,res)

});

})

var return_res = function (req,res){

tasks_left -= 1;

console.log(tasks_left);

if (tasks_left == 0) {

res.send('Hello World');

}

};

app.listen(3000, () => console.log('Example app listening on port 3000!'));

Great success!

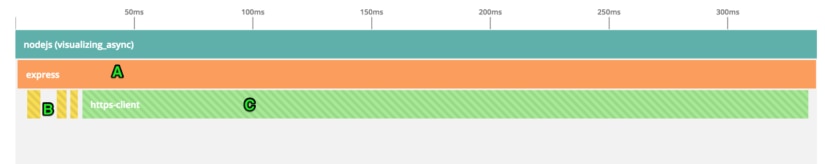
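As a rough illustration of the difference between the nested version and the counter version, here is a sketch that uses timers in place of real service calls. Everything in it (`makeFakeService`, `runNested`, `runParallel`, the 20 ms delay) is a hypothetical stand-in, not part of the example app. It records how many calls were in flight at once and how long each approach took.

```javascript
// Hypothetical fake service that tracks how many calls are in flight at once.
function makeFakeService() {
  const stats = { inFlight: 0, maxInFlight: 0 };
  function call(callback) {
    stats.inFlight += 1;
    stats.maxInFlight = Math.max(stats.maxInFlight, stats.inFlight);
    setTimeout(() => {
      stats.inFlight -= 1;
      callback(null);
    }, 20); // assumed latency per call
  }
  return { call, stats };
}

// Nested callbacks: each call starts only after the previous one finishes.
function runNested(done) {
  const service = makeFakeService();
  const start = Date.now();
  service.call(() => {
    service.call(() => {
      service.call(() => {
        done({ maxInFlight: service.stats.maxInFlight, elapsedMs: Date.now() - start });
      });
    });
  });
}

// Counter pattern: all calls start immediately, the last one to finish calls done.
function runParallel(done) {
  const service = makeFakeService();
  const start = Date.now();
  let tasksLeft = 3;
  const finish = () => {
    tasksLeft -= 1;
    if (tasksLeft === 0) {
      done({ maxInFlight: service.stats.maxInFlight, elapsedMs: Date.now() - start });
    }
  };
  service.call(finish);
  service.call(finish);
  service.call(finish);
}

runNested((result) => console.log('nested', result));
runParallel((result) => console.log('parallel', result));
```

The nested run never has more than one call in flight, so its elapsed time is roughly the sum of the three delays. The counter run has all three in flight together, so it takes roughly as long as the slowest single call.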

We’ve parallelized the service calls, and our response time is slightly better! Are we done? Is this the best we can do? The analytics work (C), where we are pushing data to a CRM for sales, is taking quite a long time. Does it make sense to make the user wait for their hotel data just because our CRM is slow? Probably not. Let’s re-architect things one more time, and not block on the analytics call.

require('appoptics-apm');

const express = require('express');

const app = express();

const request = require('request');

var tasks_left = 0;

app.get('/', function (req,res){

tasks_left = 3;

const service_call_1 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res);

});

const service_call_2 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res);

});

const service_call_3 = request('http://localhost:8082/api-v1/hotels', { json: true }, function(error,response,body){

return_res(req,res);

});

const analytics_payload = {'tags': {'environment': 'test'}, 'measurements': [{'name': 'analytics', 'value': 5}]}; // placeholder value sent to analytics

request.post({uri: 'https://api.examplecrm.com/v1/measurements',json:true, auth:{'user':'test_user', 'pass':''}, 'body':analytics_payload}, function(error,response,body){

// fire and forget: the response to the user does not wait on the CRM

});

});

var return_res = function (req,res){

tasks_left -=1;

console.log(tasks_left);

if (tasks_left == 0){

res.send('Hello World');

}

};

app.listen(3000, () => console.log('Example app listening on port 3000!'));

Even better!

We have now used Node.js callbacks to control the execution of our code: the endpoint is built to the specifications, the service calls that don’t depend on each other run in parallel, and the non-critical analytics work no longer blocks the response!
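One caveat about the counter examples above: tasks_left is declared at module level, so every request shares it. If two requests overlap, the second one resets the counter while the first one's callbacks are still pending, which can leave one of them without a response. A minimal sketch of one way around this, keeping the callback style, is to create the counter inside the handler. `fakeServiceCall`, `handleSearch`, and the fake `res` objects below are hypothetical stand-ins so the sketch runs without Express or a network.

```javascript
// Hypothetical stand-in for request(): calls back after delayMs.
function fakeServiceCall(delayMs, callback) {
  setTimeout(() => callback(null), delayMs);
}

// Same shape as the Express handler in this post, with a per-request counter.
function handleSearch(req, res) {
  let tasksLeft = 3; // local to this request, so overlapping requests do not share it

  function returnRes(error) {
    tasksLeft -= 1;
    if (tasksLeft === 0) {
      res.send('Hello World');
    }
  }

  fakeServiceCall(10, returnRes);
  fakeServiceCall(20, returnRes);
  fakeServiceCall(30, returnRes);
}

// Two overlapping "requests", each with a minimal fake res object.
const sent = [];
handleSearch({}, { send: (body) => sent.push('first: ' + body) });
handleSearch({}, { send: (body) => sent.push('second: ' + body) });

// After both have finished, sent holds exactly one entry per request.
setTimeout(() => console.log(sent), 100);
```

Because each call to `handleSearch` gets its own `tasksLeft` and its own `returnRes` closure, the two requests count down independently and each one sends exactly one response.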

Visualizing Callbacks in SolarWinds AppOptics

All of the visualizations above came from SolarWinds® AppOptics™ distributed tracing platform, which supports a broad set of languages. One of its use cases is monitoring and tracing for Node.js applications. If you want to take a look at the execution of your own code, sign up for a trial: www.appoptics.com

The SolarWinds trademarks, service marks, and logos are the exclusive property of SolarWinds Worldwide, LLC or its affiliates. All other trademarks are the property of their respective owners.