Announcing Live Code Profiling in AppOptics

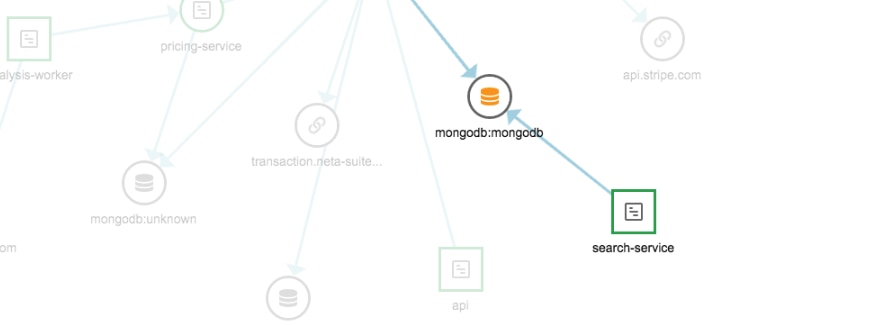

SolarWinds® AppOptics™ is known for its distributed tracing technology, which helps users solve performance issues in complex systems. Because that focus was on distributed applications, it offered less insight into compute-intensive systems and the underlying functions that can cause a performance issue. The release of live code profiling closes that gap at the function level. A simple agent install now gives full visibility into not only which system is contributing to latency, but also which function or method on that system is the culprit.

What is Live Code Profiling?

Live code profiling provides a breakdown of the most frequently called functions and methods in a transaction. Profiling data is gathered in a manner tailored to each language, and typically includes information down to the class, method, and even filename and line number. It provides enough detail to understand which line of code is causing a performance issue, along with the information needed to quickly find the relevant section of the source code.
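To make the idea of a function-level breakdown concrete, here is a minimal sampling-profiler sketch in Python. It is not how the AppOptics Java or PHP agents are implemented; it only illustrates the general technique. It periodically captures one thread's call stack with CPython's `sys._current_frames()` (an implementation-specific helper) and tallies the innermost frame by filename, line number, and function name. The names `sample_thread`, `top_frames`, and `busy_loop` are hypothetical.

```python
import collections
import sys
import threading
import time
import traceback


def sample_thread(thread_id, samples, interval=0.001):
    """Take up to `samples` snapshots of one thread's call stack.

    Each snapshot is a list of (filename, lineno, function) tuples,
    outermost frame first, innermost frame last.
    """
    stacks = []
    for _ in range(samples):
        frame = sys._current_frames().get(thread_id)
        if frame is None:  # the thread has exited; stop sampling
            break
        stacks.append([(fs.filename, fs.lineno, fs.name)
                       for fs in traceback.extract_stack(frame)])
        time.sleep(interval)
    return stacks


def top_frames(stacks, n=5):
    """Rank (filename, lineno, function) by how often it was the innermost frame.

    Returns (frame, share_of_samples) pairs, most frequent first.
    """
    counts = collections.Counter(stack[-1] for stack in stacks if stack)
    total = sum(counts.values())
    return [(frame, count / total) for frame, count in counts.most_common(n)]


def busy_loop(deadline):
    """Hypothetical CPU-bound workload used only for the demo below."""
    total = 0
    while time.monotonic() < deadline:
        total += 1
    return total


if __name__ == "__main__":
    worker = threading.Thread(target=busy_loop, args=(time.monotonic() + 0.5,))
    worker.start()
    stacks = sample_thread(worker.ident, samples=100)
    worker.join()
    for (filename, lineno, func), share in top_frames(stacks):
        print(f"{func} ({filename}:{lineno}): {share:.0%} of samples")
```

Counting the innermost frame approximates "self time" per line; counting every frame in each stack instead would approximate inclusive time per function.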

What is it Used For?

This capability was built in response to popular demand from customers, and its use cases are many, yet simple.

Can’t tell if a slow transaction is caused by the framework, third-party library, or your own “artisanal” slow code?

Live code profiling breaks down these components to identify exactly where the bottleneck is, reducing troubleshooting time and headaches.

Have a performance issue due to a regression in a new release?

Live code profiling enables you to identify the slow method that was introduced, shortening turnaround time and getting the feature out faster. Run AppOptics in staging to catch the issue before it reaches production and avoid this situation altogether.

Are Tracing and Profiling the Same Thing?

Traditionally, profiling and tracing have been separate. Teams may have used a profiling tool during development to optimize a function, then a tracing tool in production to identify I/O issues such as database calls and cross-service bottlenecks. In AppOptics, tracing and profiling are combined, enabling you to find a slow service and then the slow function within it, even when the slowdown only occurs in the real world.

How Does it Work? Does it Increase Overhead?

AppOptics is focused on performance, and providing function-level detail while keeping overhead low is not an easy task. AppOptics achieves this by using statistical profiling, coupled with an adaptive sample rate. Put another way, in a high-load environment, profiling won't run on every transaction, and even when it does run, the overhead is kept low because a statistical profiler periodically samples the call stack rather than instrumenting every function call.
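The adaptive part can be illustrated with a token bucket. The sketch below is an assumption for illustration, not AppOptics' actual sampling policy, and `AdaptiveProfilingSampler`, `max_per_second`, and `simulate` are hypothetical names. It profiles every transaction under light load and a shrinking fraction as traffic grows, which keeps the total profiling work roughly bounded.

```python
import time


class AdaptiveProfilingSampler:
    """Decide per transaction whether to profile (hypothetical token-bucket policy).

    At most about `max_per_second` transactions are profiled per second,
    with a burst allowance of one second's budget.
    """

    def __init__(self, max_per_second, clock=time.monotonic):
        self.rate = float(max_per_second)
        self.capacity = float(max_per_second)
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()

    def should_profile(self):
        now = self.clock()
        # Refill tokens for the time elapsed since the previous decision.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def simulate(requests_per_second, seconds, max_per_second):
    """Fraction of transactions profiled under a steady, simulated request rate."""
    now = 0.0
    sampler = AdaptiveProfilingSampler(max_per_second, clock=lambda: now)
    total = requests_per_second * seconds
    profiled = 0
    for i in range(total):
        now = i / requests_per_second  # advance the simulated clock
        profiled += sampler.should_profile()
    return profiled / total


if __name__ == "__main__":
    print("light load:", simulate(5, 10, max_per_second=10))
    print("heavy load:", simulate(1000, 10, max_per_second=10))
```

A fixed sampling probability would be simpler, but its cost grows linearly with traffic; a rate cap like this one keeps profiling overhead roughly flat as load increases.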

What Languages are Supported?

Profiling is available now for Java and PHP, with other languages in beta (request access here!). In addition to function-level information, Java profiling highlights when a thread is busy for other reasons, such as garbage collection. Use the new JMX dashboard in AppOptics to understand the correlation between garbage collection and application performance.

Full Visibility

Is application performance related to infrastructure? If a service is slow and all of its functions are slow, the next logical step is to look at the health of the underlying infrastructure. AppOptics enables you to quickly jump from a service context to the infrastructure running that service. The next time a pager wakes you up in the middle of the night due to a latency threshold or a backed-up queue, you will have the information you need to confidently right-size the infrastructure behind it.

All AppOptics accounts now have access to code profiling. If you aren't yet using AppOptics to monitor system performance, you can sign up for a free trial account here.